Autonomously Fix Your Vulnerabilities

Move beyond alerting. Mondoo's intelligent agents discover, prioritize, and remediate vulnerabilities autonomously, reducing your attack surface at machine speed.

Why Teams Struggle with Remediation

Research shows most organizations still rely on manual processes that can't keep up with modern threats.

Manual Workflows

Alert Fatigue

Missing Guidance

Recurring Vulnerabilities

How Mondoo Helps You Fix 10x Faster

Quickly close security gaps with guided remediation, pre-tested code snippets, and autonomous patching.

AI-Powered Automation

Mondoo automates the entire vulnerability workflow from detection to resolution. No spreadsheets required.

Intelligent Prioritization

AI analyzes business context and exploitability to surface only the critical issues that matter.

Actionable Fix Instructions

Every finding includes detailed asset context, step-by-step remediation, and pre-tested code snippets.

Shift-Left Integration

Mondoo fixes issues in CI/CD pipelines and infrastructure as code (IaC), preventing vulnerabilities from recurring in deployments.

Smart Automation with Human Control

Traditional vulnerability scanners tell you what's wrong. Mondoo's AI agents take action.

Discovery Agent

Automatically discovers and inventories all assets across cloud, on-premises, and SaaS environments.

Assessment Agent

Continuously scans for vulnerabilities, misconfigurations, and policy violations.

Prioritization Agent

Uses AI to analyze risk factors and prioritize findings by business impact.

Remediation Agent

Generates and applies fixes automatically with full rollback capability.

From Alert to Fixed in Minutes

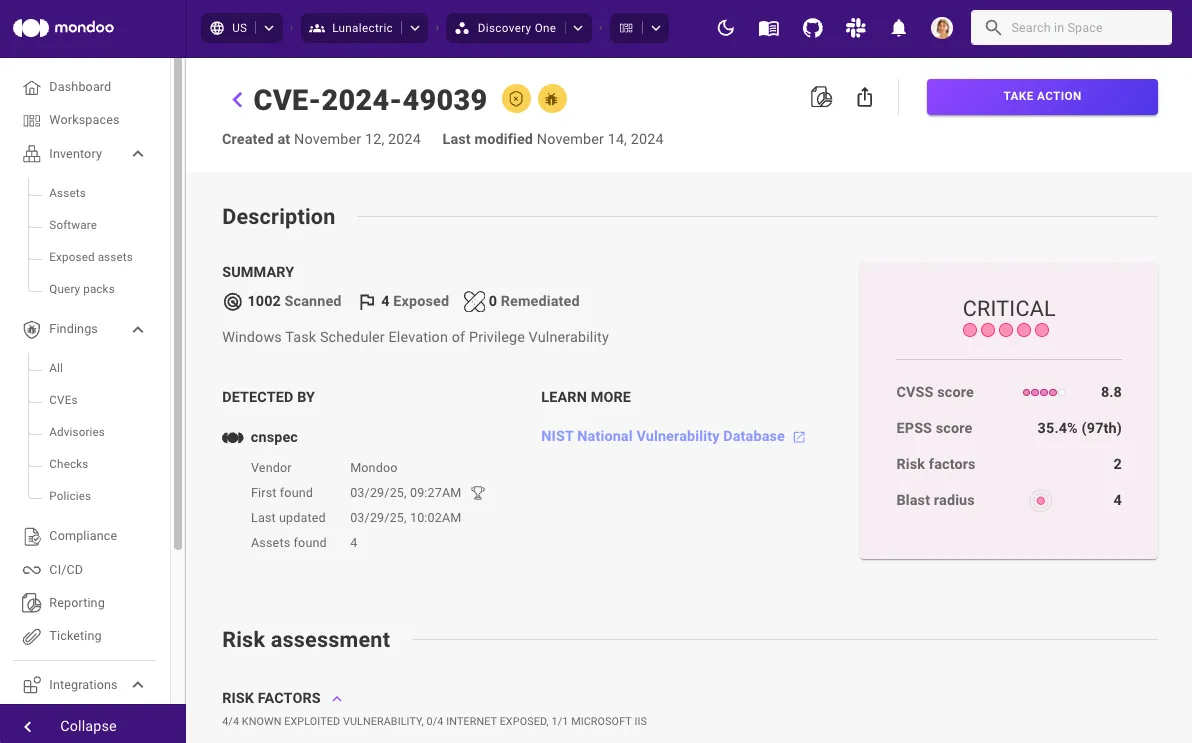

When a new CVE is published, Mondoo's agents immediately spring into action:

Detection

Instantly identify affected systems across your fleet

Analysis

Assess exploitability, blast radius, and business impact

Prioritization

Rank based on risk score and SLA requirements

Remediation

Generate fix code or apply patches automatically

Verification

Confirm fix success and update compliance status

Measurable Results

Real outcomes from real customers using Agentic Vulnerability Management

Reduction in Alerts

AI-powered prioritization focuses on the 10% of vulnerabilities that pose real risk.

Mean Time to Remediation

Automated fixes and guided remediation dramatically reduce MTTR.

Faster Than Manual Work

Agentic automation handles repetitive tasks at machine speed.

Traditional VM vs. Agentic VM

Traditional VM

Agentic VM

Mondoo Platform FAQs

Ready for Autonomous Vulnerability Management?

See how Mondoo's AI agents can transform your security operations.